MULTI-CLOUD STRATEGY IS A KEY TO DIGITAL TRANSFORMATION AIMED AT MODERNIZING PROCESSES

Deploying a multi-cloud strategy can lead to substantial benefits, while avoiding vendor lock-in. Here’s how you can do it right. For a growing number of enterprises, a migration to the cloud is not a simple matter of deploying an application or two onto Amazon Web Services, Microsoft Azure, or some other hosted service. It’s a multi-cloud strategy that’s a key part of a digital transformation aimed at modernizing processes.

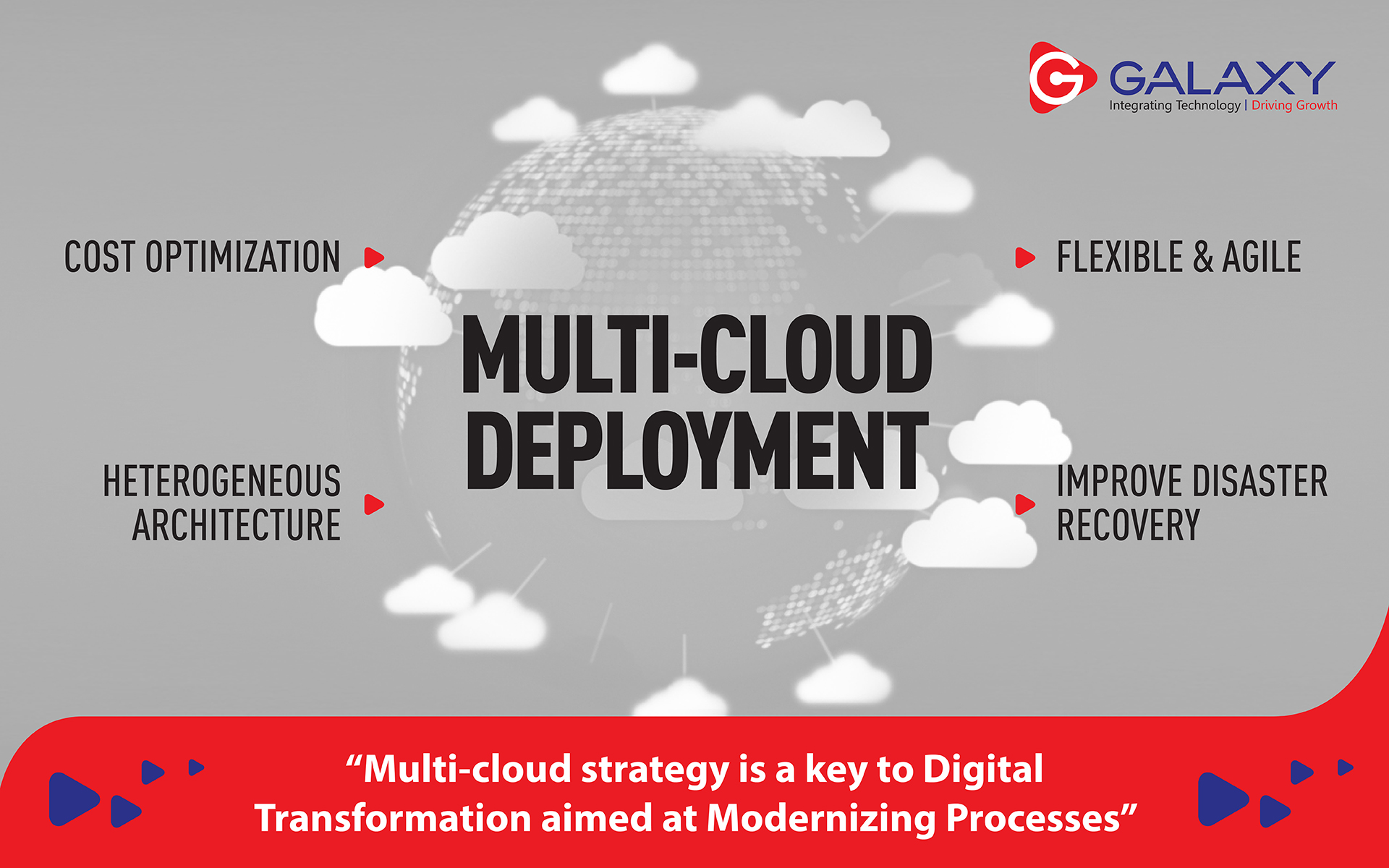

Benefits of Deploying a Multi-Cloud Strategy

1. Using multiple cloud computing services such as infrastructure-as-a-service (IaaS), platform-as-a-service (PaaS), and software-as-a-service (SaaS) in a single heterogeneous architecture offers the ability to reduce dependency on any single vendor.

2. It can also improve disaster recovery and data-loss resilience, make it easier to exploit pricing programs and consumption/loyalty promotions, help companies comply with data sovereignty and geopolitical barriers, and enable organizations to deliver the best available infrastructure, platform, and software services.

3. Cost optimization is a huge benefit. It’s not so much that you are spending less by going multi-cloud, but rather you can manage risk far better.

4. Flexible & Agile: Having multiple clouds “makes you more flexible and agile, allows for the adoption of best-of-breed technologies, and provides far better disaster recovery. One has the flexibility to run certain applications in a private environment, and others in a public environment, while keeping everything connected. Cloud service providers have the right skill sets to make this all happen so that customers don’t have to maintain this expertise in house.”

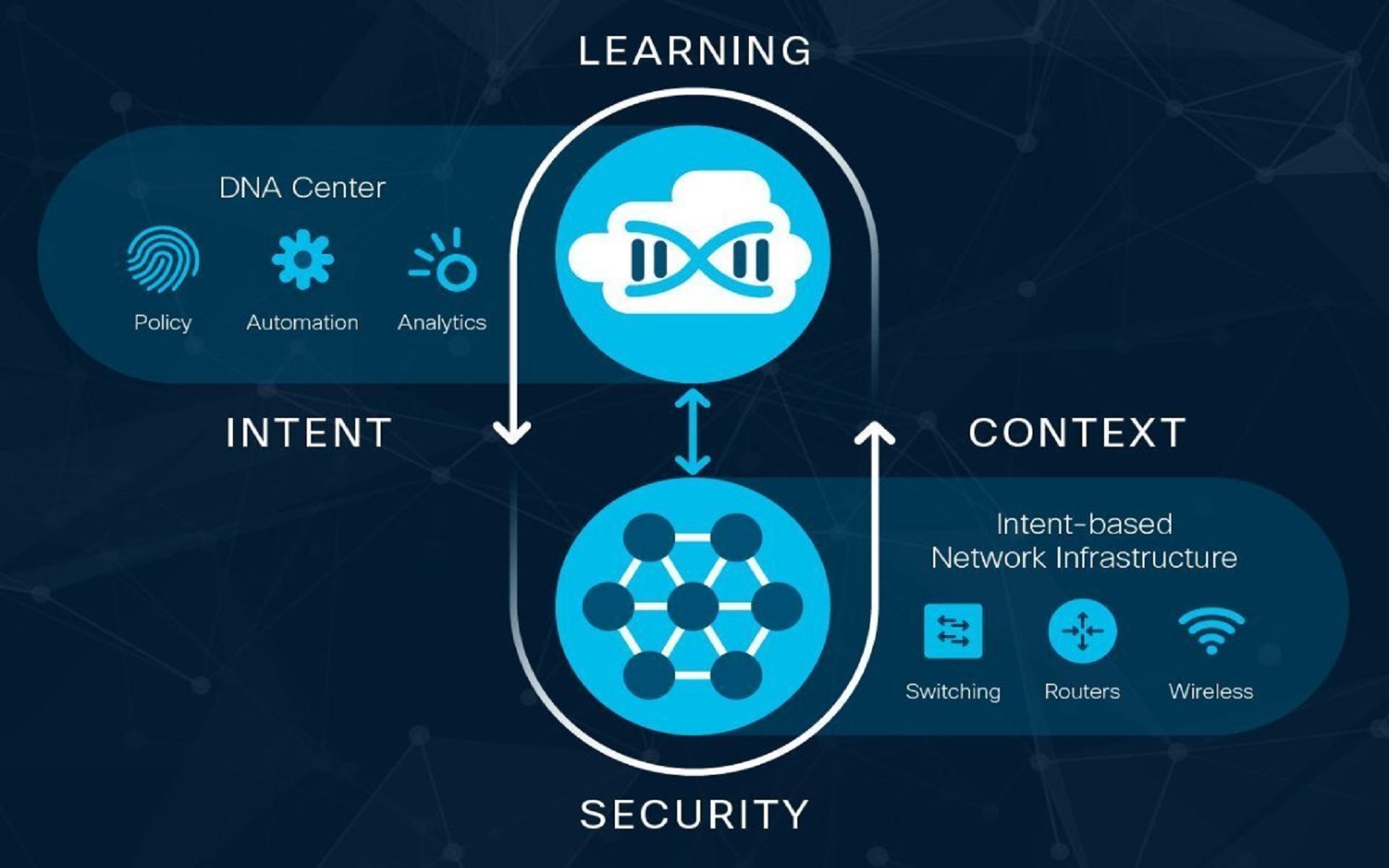

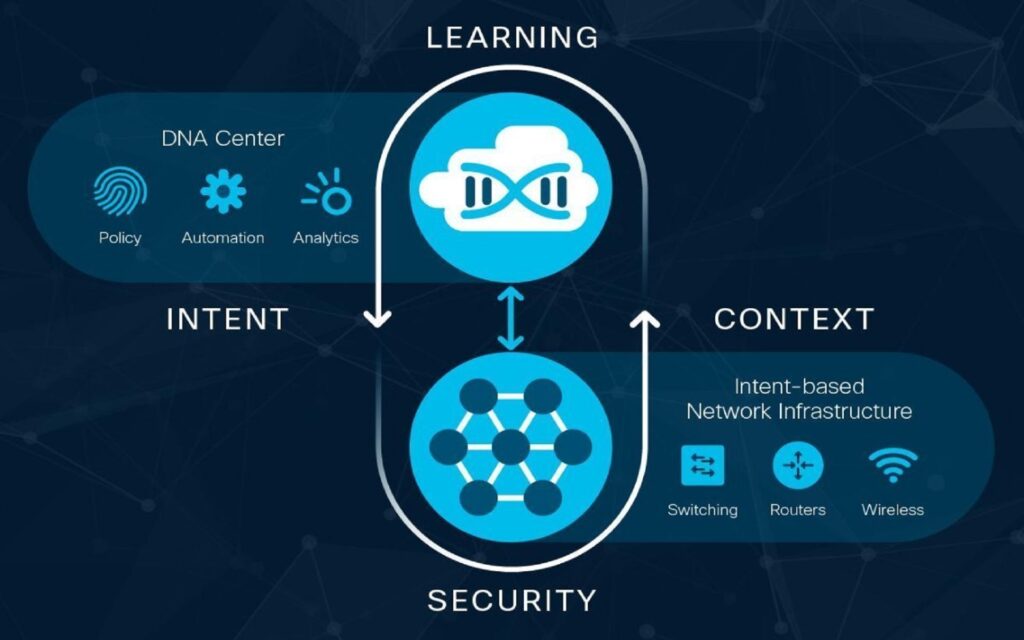

Like any other major IT initiative, an effective multi-cloud strategy depends on having the right people and tools in place and on keeping the effort aligned with business goals. A multi-cloud deployment adds complexities that require organizations to develop a deep understanding of the services they’re buying and to perform due diligence before plunging ahead.

Due diligence includes planning. Use a cloud adoption framework to provide a governing process for identifying applications, selecting cloud providers, and managing the ongoing operational tasks associated with public cloud services. Educate all staff on the cloud adoption framework and on the details of each selected cloud service provider’s (CSP’s) architecture, services, and the tools available to assist in the deployment.

Moving to a multi-cloud environment might present risks that were not present in current applications and systems. Check for new risks and identify any new security controls needed to mitigate them. Use CSP-provided tools to check for proper and secure usage of services. A company’s infrastructure should be treated as source code, and change control procedures should be enforced. Procedures will need to address differences in CSPs’ implementations. Decommissioning of services is also part of due diligence.

The most important part of any application or system to the organization is the data stored and processed within it. Therefore, it is critical to understand how the data can be extracted from one CSP and moved to another.

When relying on multiple cloud services to deliver business applications to customers and internal users, strong integration between services is vital. Put the right APIs (application programming interfaces) in place so that systems can work together to create a seamless user experience, with no lags or delays in service.
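One way to make the point about extracting data from one CSP and moving it to another concrete is to put every provider behind the same small interface. The sketch below is only an illustration under stated assumptions: `ObjectStore`, `InMemoryStore`, and `migrate` are hypothetical names invented here, and the in-memory class stands in for a real provider adapter rather than any actual CSP SDK.

```python
import hashlib
from typing import Dict, Iterable, Protocol


class ObjectStore(Protocol):
    """Hypothetical provider-neutral interface; not a real CSP SDK."""

    def list_keys(self) -> Iterable[str]: ...
    def get(self, key: str) -> bytes: ...
    def put(self, key: str, data: bytes) -> None: ...


class InMemoryStore:
    """Stand-in for a CSP-specific adapter (an assumption for this sketch)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._objects: Dict[str, bytes] = {}

    def list_keys(self) -> Iterable[str]:
        return sorted(self._objects)

    def get(self, key: str) -> bytes:
        return self._objects[key]

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data


def migrate(source: ObjectStore, target: ObjectStore) -> int:
    """Copy every object to the target, verify it by SHA-256, return the count."""
    copied = 0
    for key in source.list_keys():
        data = source.get(key)
        target.put(key, data)
        # Read the object back from the target so corruption is caught early.
        expected = hashlib.sha256(data).digest()
        actual = hashlib.sha256(target.get(key)).digest()
        if expected != actual:
            raise ValueError(f"checksum mismatch for {key!r}")
        copied += 1
    return copied


if __name__ == "__main__":
    old_csp = InMemoryStore("provider-a")
    new_csp = InMemoryStore("provider-b")
    old_csp.put("invoices/2024.csv", b"id,amount\n1,100\n")
    old_csp.put("logs/app.log", b"started\n")
    print(migrate(old_csp, new_csp), "objects copied")
```

Because application code depends only on the shared interface, swapping providers means writing one new adapter rather than touching every caller. Real migrations would also need to handle metadata, access policies, and large objects, which this sketch leaves out.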

Manage access and protect data:

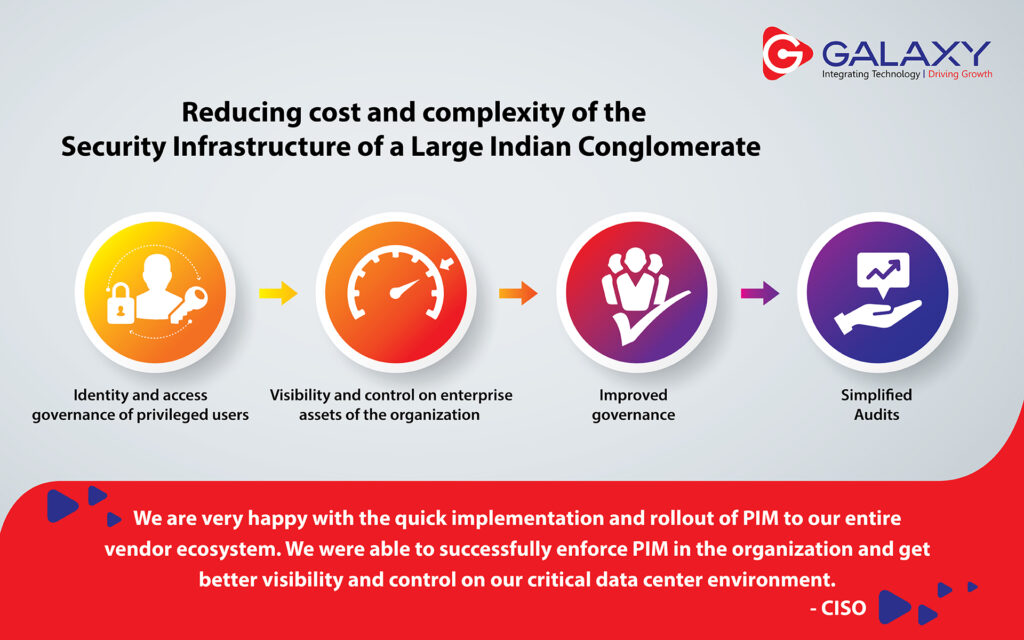

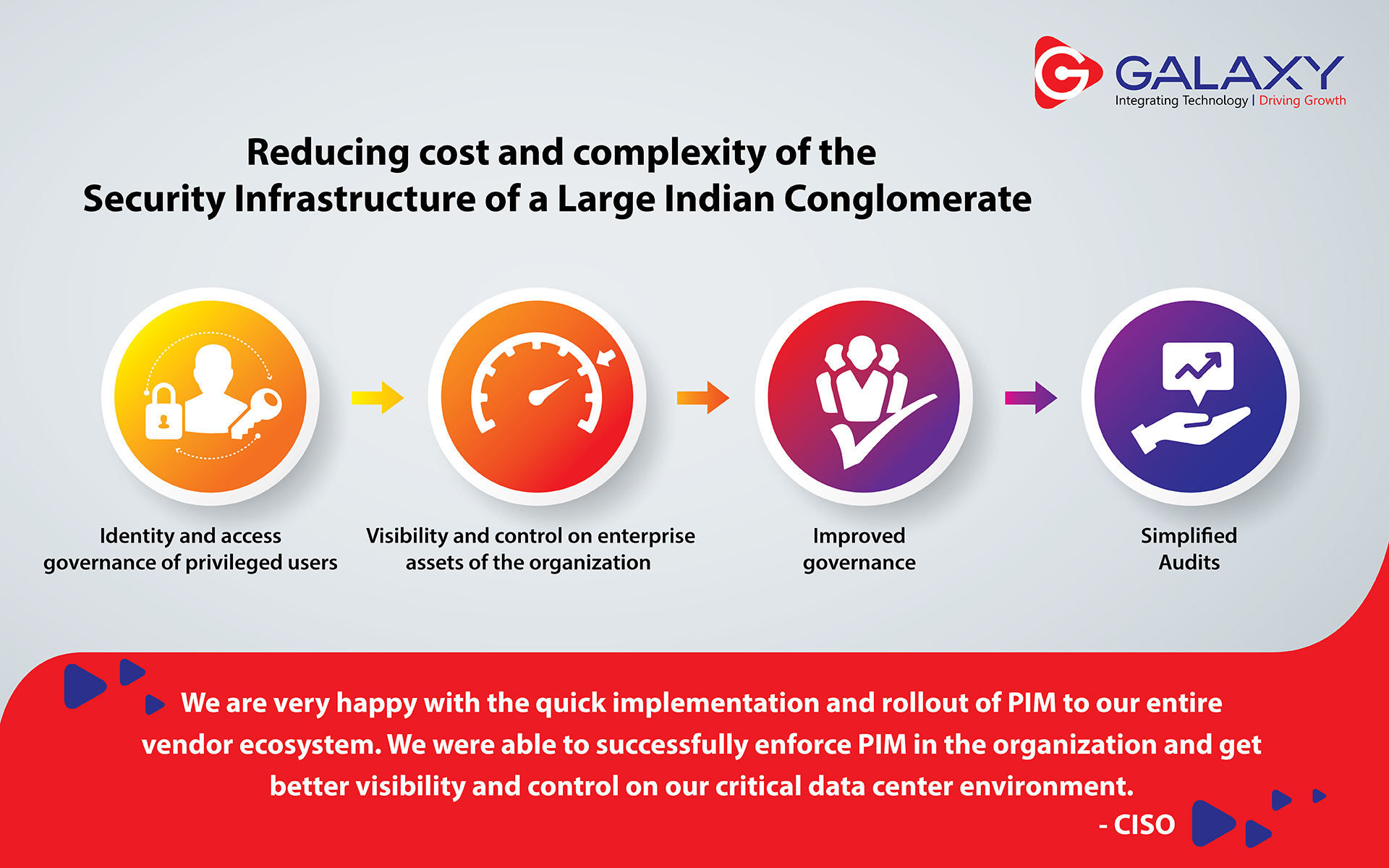

Using multiple cloud services, including a mix of public and private clouds, presents a host of security challenges. A key to ensuring strong security is identifying and authenticating users. Use multifactor authentication across the multiple CSPs to reduce the risk of credential compromise.
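To give a sense of what a second factor can look like in practice, here is a minimal sketch of time-based one-time passwords (TOTP, RFC 6238, built on HOTP from RFC 4226) using only the Python standard library. It is an illustration, not a recommendation to build your own MFA: most organizations would rely on the MFA features their CSPs or identity provider already offer. The function names and the `window` parameter are choices made for this example.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA-1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits of the last byte
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the time-step count."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, submitted: str, at: Optional[float] = None,
           step: int = 30, window: int = 1) -> bool:
    """Accept codes from the current step and `window` steps on either side."""
    now = time.time() if at is None else at
    counter = int(now // step)
    for drift in range(-window, window + 1):
        if counter + drift < 0:
            continue  # no time step exists before the epoch
        # compare_digest avoids leaking how many characters matched.
        if hmac.compare_digest(hotp(secret, counter + drift), submitted):
            return True
    return False


if __name__ == "__main__":
    shared_secret = b"12345678901234567890"  # test secret from the RFCs
    print(totp(shared_secret, at=59))
```

The same shared secret can back an authenticator app regardless of which cloud the user is signing in to, which is one reason a single identity provider in front of several CSPs tends to be easier to manage than per-cloud credentials.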

Organizations should also assign user access rights. That includes creating a collection of roles to fill both shared and user-specific responsibilities across the multiple clouds. Companies will need to investigate the differences in how role-based access control can be implemented with the selected CSPs.

Another good practice is to create and enforce resource access policies. CSPs offer various types of storage services, such as virtual disks and content delivery services. Each of these might have unique access policies that must be assigned to protect the data they store.

Protecting data from unauthorized access is vital. Encrypting data at rest across all CSPs protects it from disclosure if unauthorized access occurs. Companies need to properly manage the associated encryption keys to ensure effective encryption and the ability to operate across CSPs. It’s also important to ensure that each CSP’s data backup and recovery process meets your organization’s needs. Companies might need to augment CSPs’ processes with additional backup and recovery.

Keep an eye on cost:

One of the biggest selling points of the cloud is that it can help organizations reduce costs through more efficient use of computing resources. Services are paid for on an on-demand basis, and the cost of buying and maintaining numerous servers is eliminated.

Nevertheless, in a multi-cloud environment it’s easy to lose track of costs that can then get out of control. Carefully consider the cost of managing multi-cloud environments, including human capital costs associated with maintaining multi-cloud competencies and expertise, as well as costs associated with administrative control, integration, performance design, and the sometimes-difficult task of isolating and mitigating issues and defects.
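A simple habit that helps keep spending visible is to normalize each provider’s billing export into one common record and total it in one place. The sketch below assumes such records already exist; `CostRecord`, `total_by`, and `over_budget` are hypothetical names for this example, and the figures are made up. It does not call any real billing API.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class CostRecord:
    """One normalized billing line (hypothetical schema for this sketch)."""
    provider: str
    team: str
    usd: float


def total_by(records: Iterable[CostRecord], field: str) -> Dict[str, float]:
    """Sum spend grouped by a record field such as 'provider' or 'team'."""
    totals: Dict[str, float] = defaultdict(float)
    for record in records:
        totals[getattr(record, field)] += record.usd
    return dict(totals)


def over_budget(totals: Dict[str, float], budgets: Dict[str, float]) -> List[str]:
    """Return names whose spend exceeds budget; no budget counts as zero."""
    return sorted(
        name for name, spent in totals.items()
        if spent > budgets.get(name, 0.0)
    )


if __name__ == "__main__":
    records = [
        CostRecord("provider-a", "web", 1200.0),
        CostRecord("provider-b", "web", 300.0),
        CostRecord("provider-b", "data", 900.0),
    ]
    print(total_by(records, "provider"))
    print(over_budget(total_by(records, "team"), {"web": 1000.0, "data": 1000.0}))
```

Treating a missing budget as zero means any unplanned spend is flagged rather than silently ignored, which matches the concern that multi-cloud costs are easy to lose track of.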

Leveraging service provider-specific capabilities can also lead to vendor lock-in, so consider the value and commitment of these choices. Not all applications and compute needs are created equal, so it’s not possible to pick a single cloud platform or strategy that meets all your needs. In general, a multi-cloud strategy provides flexibility and leverage. Having multiple providers means you are not locked into any one of them, and it gives you the benefit of innovation and price negotiation. To fully realize the benefits of multi-cloud, such as workload portability, you must consider your architecture. For example, deploying applications via containers allows for portability.

Blog Credit – Mukesh Choithani – AVP – DataCenter, Galaxy Office Automation Pvt Ltd
